How to Perform Seamless Snowflake Integration

When Salesforce acquired Slack for $27.7 billion, it ran into major integration challenges. The goal was to combine Slack’s messaging platform with Salesforce’s CRM data to create a unified customer engagement solution.

But stitching together the data ecosystems of the two companies required navigating complex technical integrations. Salesforce found itself spending heavily on integration services and consultants, and the projects ran months behind schedule, resulting in massive losses.

If you are undertaking any large-scale data integration initiative, you can relate to Salesforce’s woes. Whether you are consolidating siloed data, connecting apps, or sharing insights, integration is riddled with complexity that can stall your digital transformation plans.

But what if you could tap into a versatile platform built from the ground up for flexibility, scalability, and seamless integration? Enter Snowflake.

With extensive native integration features and third-party connectors, Snowflake is designed to tackle the toughest integration challenges.

This guide explores Snowflake integration in depth, covering key mechanisms, architectures, tools, and techniques to make your integration smooth and future-ready, including Snowflake’s APIs for secure, scalable connections to external systems.

Let’s dive into the world of Snowflake integration and discover how it can transform your organization!

An Overview of Snowflake Integration

Snowflake integration refers to connecting Snowflake’s cloud data platform with other systems, applications, or tools to efficiently collect, transform, analyze and share data. The key aspects of Snowflake integration include:

- Sourcing Data: Ingesting data from diverse sources such as SaaS applications, on-premises databases, cloud storage, APIs, and IoT devices. Snowflake offers several ingestion options for these sources.

- Transforming Data: Cleansing, enriching and processing ingested data to make it analysis-ready. Snowflake supports simple transformations during loading (a COPY INTO statement can select, reorder, and cast columns from staged files) and richer transformations with SQL once the data is in Snowflake.

- Loading Data: Moving processed data into the Snowflake data warehouse for analysis. Snowflake offers bulk loading with COPY INTO (using PUT to upload local files to an internal stage first) and continuous loading with Snowpipe.

- Consuming Results: Connecting BI tools, dashboards and external applications to consume Snowflake data and insights. Snowflake also supports secure data sharing with other Snowflake accounts.

- Orchestration: Automating end-to-end workflows encompassing data extraction, processing, loading, analysis and more, either with external orchestration tools that call Snowflake through its drivers or APIs, or with Snowflake’s native Tasks.

With the right Snowflake integration strategy, Snowflake can become the central data hub for your organization – systematically collecting, refining, relating, and sharing data to create a single source of truth. Let us look at some critical components of Snowflake integrations in detail:

Snowflake Integration Components

- Connectors and Drivers

Snowflake supports connectivity through standard interfaces such as ODBC and JDBC, and provides official connectors and drivers for languages including Python, Node.js, .NET, and Go. These let applications and BI/analytics tools integrate with Snowflake.

For example, the Snowflake Connector for Spark lets Spark clusters read from and write to Snowflake directly, and the ODBC driver connects BI tools such as Tableau. These out-of-the-box integrations reduce the need for custom connectivity code.
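As a minimal sketch of the Python route, the snippet below reads connection settings from environment variables instead of hardcoding them and runs a query through the Snowflake Connector for Python (`snowflake-connector-python`). The `SNOWFLAKE_*` variable names are a convention chosen for this example, and password authentication is used only for brevity; key-pair or OAuth authentication is usually a better fit for service accounts.

```python
import os
from typing import Mapping


def snowflake_connection_params(env: Mapping[str, str] = os.environ) -> dict:
    """Collect Snowflake connection settings from environment variables.

    The SNOWFLAKE_* names are a convention chosen for this sketch; nothing
    here is read automatically by the connector itself.
    """
    required = ("ACCOUNT", "USER", "PASSWORD")
    missing = [f"SNOWFLAKE_{key}" for key in required if not env.get(f"SNOWFLAKE_{key}")]
    if missing:
        raise KeyError("missing environment variables: " + ", ".join(missing))

    # Keyword names match snowflake.connector.connect(): account, user, password, ...
    params = {key.lower(): env[f"SNOWFLAKE_{key}"] for key in required}

    # Optional session context, passed through only when set.
    for key in ("WAREHOUSE", "DATABASE", "SCHEMA", "ROLE"):
        value = env.get(f"SNOWFLAKE_{key}")
        if value:
            params[key.lower()] = value
    return params


def fetch_rows(sql: str, env: Mapping[str, str] = os.environ) -> list:
    """Run a single query with the Snowflake Connector for Python."""
    import snowflake.connector  # pip install snowflake-connector-python

    conn = snowflake.connector.connect(**snowflake_connection_params(env))
    try:
        cur = conn.cursor()
        cur.execute(sql)
        return cur.fetchall()
    finally:
        conn.close()
```

With the variables set, `fetch_rows("SELECT CURRENT_VERSION()")` would return the result as a list of tuples.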

- Partner Connectors

In addition to official connectors, Snowflake also has a thriving partner ecosystem providing 150+ third-party connectors and integrations for data sources, ETL/ELT platforms, BI tools and more.

These connectors simplify connecting SaaS applications like Salesforce and Marketo to Snowflake. Popular ETL tools such as Informatica, Talend, and Matillion provide dedicated Snowflake connectors to accelerate loading and transformation.

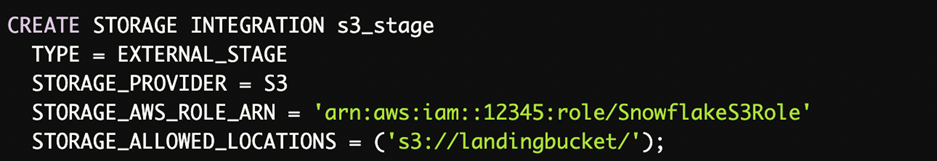

- Storage Integrations

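A storage integration is a Snowflake object that stores a generated identity for external cloud storage (Amazon S3, Azure Blob Storage, or Google Cloud Storage), along with the storage locations it is allowed to access. Stages that reference the integration can load and unload data without exposing access keys or other secrets in SQL.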

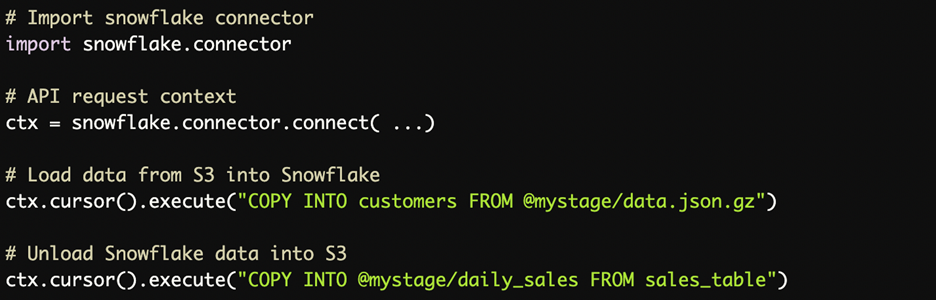

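For example, on AWS a storage integration references an IAM role that Snowflake assumes, so access to the S3 bucket is governed by that role’s policies rather than by keys embedded in stage definitions.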
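The DDL behind such a setup can be sketched as follows. This assumes Python is used to generate the statements and submit them (for example with `cursor.execute` from the Snowflake Connector for Python); the integration name, role ARN, bucket, and stage name are placeholders.

```python
from typing import Sequence


def quote(value: str) -> str:
    """Render a SQL string literal, doubling embedded single quotes."""
    return "'" + value.replace("'", "''") + "'"


def s3_integration_ddl(name: str, role_arn: str, allowed_locations: Sequence[str]) -> str:
    """CREATE STORAGE INTEGRATION for S3 access through an IAM role."""
    locations = ", ".join(quote(loc) for loc in allowed_locations)
    return (
        f"CREATE STORAGE INTEGRATION {name}\n"
        "  TYPE = EXTERNAL_STAGE\n"
        "  STORAGE_PROVIDER = 'S3'\n"
        "  ENABLED = TRUE\n"
        f"  STORAGE_AWS_ROLE_ARN = {quote(role_arn)}\n"
        # Stages using this integration may only point inside these locations.
        f"  STORAGE_ALLOWED_LOCATIONS = ({locations})"
    )


def s3_stage_ddl(stage: str, integration: str, url: str, file_type: str = "JSON") -> str:
    """CREATE STAGE that gets its access from the storage integration."""
    return (
        f"CREATE STAGE {stage}\n"
        f"  STORAGE_INTEGRATION = {integration}\n"
        f"  URL = {quote(url)}\n"
        f"  FILE_FORMAT = (TYPE = {quote(file_type)})"
    )


print(s3_integration_ddl("s3_int", "arn:aws:iam::123456789012:role/snowflake_load",
                         ["s3://my-bucket/raw/"]))
print(s3_stage_ddl("raw_stage", "s3_int", "s3://my-bucket/raw/"))
```

After creating the integration, `DESC INTEGRATION s3_int` returns `STORAGE_AWS_IAM_USER_ARN` and `STORAGE_AWS_EXTERNAL_ID`, which go into the IAM role’s trust policy so Snowflake can assume the role. Creating an integration requires ACCOUNTADMIN or a role with the CREATE INTEGRATION privilege.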

- Data Sharing

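Snowflake Secure Data Sharing gives other Snowflake accounts read-only access to selected databases, schemas, and tables without copying the data. Sharing with accounts in the same region is immediate; sharing with accounts in other regions or on other cloud platforms requires replicating the data to that region first.

For example, you can securely share Snowflake data with business partners or subsidiaries that have their own Snowflake accounts. This simplifies data integration between organizations.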
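On the provider side, sharing comes down to a few SQL statements. The sketch below generates them in Python; the share, database, schema, table, and consumer account names are hypothetical, and consumer accounts are written in the `org_name.account_name` form.

```python
from typing import List, Sequence


def share_statements(share: str, database: str, schema: str,
                     tables: Sequence[str], consumers: Sequence[str]) -> List[str]:
    """Provider-side statements that expose selected tables to consumer accounts."""
    statements = [
        f"CREATE SHARE {share}",
        # Consumers need USAGE on the containers before SELECT on a table is useful.
        f"GRANT USAGE ON DATABASE {database} TO SHARE {share}",
        f"GRANT USAGE ON SCHEMA {database}.{schema} TO SHARE {share}",
    ]
    statements += [f"GRANT SELECT ON TABLE {database}.{schema}.{t} TO SHARE {share}" for t in tables]
    statements.append(f"ALTER SHARE {share} ADD ACCOUNTS = {', '.join(consumers)}")
    return statements


for stmt in share_statements("sales_share", "sales_db", "public", ["orders"], ["partnerorg.partner_acct"]):
    print(stmt)
```

The consumer then mounts the share as a read-only database with a statement such as `CREATE DATABASE shared_sales FROM SHARE <provider_org>.<provider_account>.sales_share`.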

- Snowpipe

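Snowpipe is Snowflake’s continuous data loading service. It loads data in micro-batches shortly after new files land in a stage, triggered either by cloud storage event notifications (auto-ingest) or by calls to the Snowpipe REST API.

For example, you can configure Snowpipe with auto-ingest to load files as they arrive in an Azure Blob Storage container, with Azure Event Grid signaling each new file. Events published to Azure Event Hubs can first be written to Blob Storage, for instance with Event Hubs Capture, and then loaded by Snowpipe. This enables near-real-time data integration.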
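A sketch of the Azure auto-ingest setup, generated from Python for consistency with the other examples. It assumes an Event Grid subscription already routes blob-created events to an Azure storage queue; the queue URI, tenant ID, and the pipe, table, and stage names are placeholders.

```python
def quote(value: str) -> str:
    """Render a SQL string literal, doubling embedded single quotes."""
    return "'" + value.replace("'", "''") + "'"


def azure_notification_integration_ddl(name: str, queue_uri: str, tenant_id: str) -> str:
    # Snowflake reads blob-created events from the storage queue that Event Grid feeds.
    return (
        f"CREATE NOTIFICATION INTEGRATION {name}\n"
        "  ENABLED = TRUE\n"
        "  TYPE = QUEUE\n"
        "  NOTIFICATION_PROVIDER = AZURE_STORAGE_QUEUE\n"
        f"  AZURE_STORAGE_QUEUE_PRIMARY_URI = {quote(queue_uri)}\n"
        f"  AZURE_TENANT_ID = {quote(tenant_id)}"
    )


def auto_ingest_pipe_ddl(pipe: str, table: str, stage: str, integration: str,
                         file_type: str = "JSON") -> str:
    return (
        f"CREATE PIPE {pipe}\n"
        "  AUTO_INGEST = TRUE\n"
        # The pipe names the notification integration as an uppercase string.
        f"  INTEGRATION = {quote(integration.upper())}\n"
        "  AS\n"
        f"  COPY INTO {table}\n"
        f"  FROM @{stage}\n"
        f"  FILE_FORMAT = (TYPE = {quote(file_type)})"
    )


print(auto_ingest_pipe_ddl("events_pipe", "raw_events", "events_stage", "events_int"))
```

Once the pipe exists, `SELECT SYSTEM$PIPE_STATUS('events_pipe')` reports whether it is running and how many files are pending.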

- Streams and Tasks

Snowflake Streams and Snowflake Tasks allow query-based ETL and orchestration of data pipelines.

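A stream records the changes (inserts, updates, and deletes) made to a table. Querying the stream returns the changed rows along with metadata columns such as METADATA$ACTION, and consuming the stream in a DML statement advances its offset so the same changes are not processed twice.

Tasks run a SQL statement or stored procedure on a schedule, either a fixed interval or a CRON expression, and can be chained into task graphs in which child tasks run after their predecessors finish. They are useful for orchestrating actions after data loads.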
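A minimal change-data pipeline combining both features might look like this. The table, stream, task, warehouse, and column names (`product_id`, `price`) are hypothetical; the task is scheduled hourly but only runs when the stream has new changes, and it records each change with its `METADATA$ACTION` value.

```python
from typing import List


def cdc_pipeline(source: str, target: str, stream: str, task: str, warehouse: str,
                 cron: str = "0 * * * * UTC") -> List[str]:
    """Stream + task pair that copies captured changes into a history table."""
    return [
        # The stream tracks inserts, updates, and deletes on the source table.
        f"CREATE STREAM {stream} ON TABLE {source}",
        (
            f"CREATE TASK {task}\n"
            f"  WAREHOUSE = {warehouse}\n"
            f"  SCHEDULE = 'USING CRON {cron}'\n"
            # Skip scheduled runs when the stream has no new changes.
            f"  WHEN SYSTEM$STREAM_HAS_DATA('{stream.upper()}')\n"
            "AS\n"
            f"  INSERT INTO {target} (product_id, price, change_type)\n"
            # Reading the stream inside DML advances its offset when the insert commits.
            f"  SELECT product_id, price, METADATA$ACTION FROM {stream}"
        ),
        # Tasks are created suspended and must be resumed explicitly.
        f"ALTER TASK {task} RESUME",
    ]


for stmt in cdc_pipeline("products", "product_changes", "products_stream",
                         "apply_product_changes", "ETL_WH"):
    print(stmt)
```

Updates appear in a stream as a DELETE and INSERT pair with `METADATA$ISUPDATE` set to TRUE, so a production pipeline would usually apply changes with MERGE rather than appending them as shown here.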

- External Functions

Snowflake external functions let SQL queries call code that runs outside Snowflake, typically a remote service behind a cloud API gateway, so business logic hosted elsewhere can be used directly in SQL.

For example, an external function can route requests through Amazon API Gateway to a machine learning model hosted on Amazon SageMaker, returning predictions that enrich query results.
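On the remote side, an external function is an HTTPS endpoint that follows Snowflake’s JSON batch format: each request body carries `{"data": [[row_number, arg, ...], ...]}`, and the response must return the same row numbers with one result each. The sketch below assumes an AWS Lambda function behind Amazon API Gateway with Lambda proxy integration; the price-tier rule is a hypothetical stand-in for a real model call such as a SageMaker endpoint.

```python
import json


def price_tier(price):
    """Hypothetical business rule standing in for a real model call."""
    if price is None:
        return None  # SQL NULL arrives as JSON null
    return "premium" if price >= 100 else "standard"


def lambda_handler(event, context=None):
    """Lambda proxy handler using Snowflake's external function JSON format."""
    rows = json.loads(event["body"])["data"]
    # Echo each row number so Snowflake can match results to input rows.
    results = [[row[0], price_tier(row[1])] for row in rows]
    return {"statusCode": 200, "body": json.dumps({"data": results})}
```

In Snowflake, the endpoint would be registered with `CREATE API INTEGRATION` (using `API_PROVIDER = aws_api_gateway`) and `CREATE EXTERNAL FUNCTION price_tier(price NUMBER) RETURNS VARIANT API_INTEGRATION = ... AS '<API Gateway URL>'`, after which `SELECT price_tier(price) FROM products` calls the endpoint in batches.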

These diverse integration mechanisms make Snowflake extremely extensible and enable building complex ETL/ELT pipelines, automation workflows and more.

Snowflake Integration Patterns

While Snowflake provides extensive integration capabilities, actual implementation patterns depend on specific use cases. Common integration patterns include:

– Batch ETL: Extracting data from sources, transforming via ETL tools, and batch loading into Snowflake using COPY or partner connectors. Useful for high-volume, periodic data loads.

– Continuous Replication: Using change data capture (CDC) and streaming mechanisms like Snowpipe to load data continuously as it gets created or changed in sources. Enables low-latency data pipelines.

– Real-time Sync: Leveraging Snowflake Tasks, Streams and External Functions to trigger actions when new data arrives, implement data validations, consume machine learning models, etc. Provides near-real-time integration capabilities.

– Analytics Integration: Directly connecting BI and data science platforms like Tableau, Looker, Databricks etc. to Snowflake to consume its data. Maximizes analytics agility.

– Application Integration: Using Snowflake data, results and insights to drive business applications built with platforms like AppSheet, OutSystems etc. Powers data-driven apps.

– Partner Ecosystem Integration: Consuming data, algorithms and services from technology partners like Zepl and TigerGraph to extend Snowflake’s capabilities. Strengthens overall data ecosystem.

The right patterns ultimately depend on your goals, workloads and constraints. A typical Snowflake implementation utilizes a blend of the above patterns.

Snowflake Integration in Action

To see Snowflake integration mechanisms in action, let us walk through a sample use case encompassing batch ETL orchestration and BI integration:

Our e-commerce company has product data in an on-premises MySQL database. The goal is to load this data into Snowflake and enable interactive reporting using Tableau. Here are the steps:

- Create a storage integration for staging data:

- Extract data from MySQL using an ETL tool like Informatica and load JSON files into the S3 staging location.

- Copy data from S3 stage into Snowflake tables using COPY command:

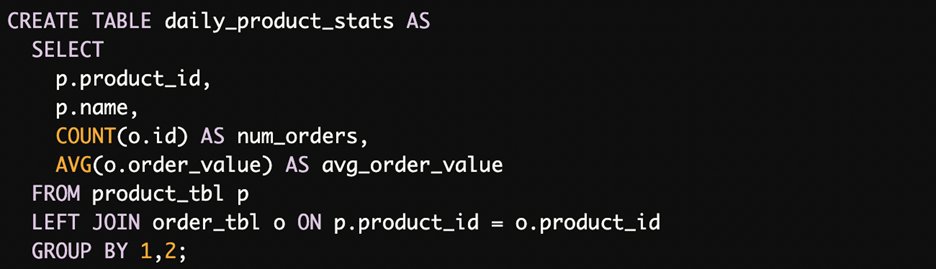

- Transform data if needed using SQL queries on Snowflake tables. For example:

- Connect Tableau to Snowflake and create dashboards visualizing daily product metrics.

- Schedule load jobs using orchestration tools like Airflow to repeat the ETL process periodically.
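The SQL for the load and transform steps could look roughly as follows, written as a Python list so an orchestrator such as Airflow can run the statements in order. The stage, table, and JSON field names are assumptions for this sketch, and the JSON files are assumed to hold one object per line (adding `STRIP_OUTER_ARRAY = TRUE` to the file format handles files that contain a single array).

```python
from typing import List


def product_pipeline_sql(stage: str = "mysql_export_stage") -> List[str]:
    """Ordered statements for the hypothetical product pipeline."""
    return [
        # Land raw JSON documents in a single VARIANT column.
        "CREATE TABLE IF NOT EXISTS raw_products (v VARIANT)",
        f"COPY INTO raw_products FROM @{stage}/products/ FILE_FORMAT = (TYPE = 'JSON')",
        # Parse the JSON into typed columns for reporting.
        (
            "CREATE OR REPLACE TABLE products AS\n"
            "SELECT v:product_id::NUMBER AS product_id,\n"
            "       v:name::STRING AS name,\n"
            "       v:price::NUMBER(10, 2) AS price,\n"
            "       v:updated_at::TIMESTAMP_NTZ AS updated_at\n"
            "FROM raw_products"
        ),
        # Daily metrics table for the Tableau dashboards.
        (
            "CREATE OR REPLACE TABLE daily_product_metrics AS\n"
            "SELECT DATE_TRUNC('DAY', updated_at) AS day,\n"
            "       COUNT(*) AS products_updated,\n"
            "       AVG(price) AS avg_price\n"
            "FROM products\n"
            "GROUP BY 1"
        ),
    ]


for stmt in product_pipeline_sql():
    print(stmt, end=";\n\n")
```

Because COPY INTO keeps load metadata per table, re-running the schedule skips staged files that were already loaded, which makes the batch job safe to repeat.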

This demonstrates a batch ETL pattern: Informatica pulls data from MySQL and stages it in S3, COPY INTO loads it into Snowflake, and Tableau consumes the results for analysis. The pipeline can be automated with orchestration tools.

Streaming or near-real-time patterns can be built in a similar way with Snowpipe, Streams, and Tasks, depending on the use case requirements.

Snowflake Integration Best Practices

Based on the above overview, here are some key best practices to follow for optimal Snowflake integration:

– Evaluate both batch and real-time integration patterns depending on your workloads, data volumes, latency needs etc. Blend approaches for maximum efficiency.

– Take advantage of native Snowflake integration mechanisms like Storage Integrations, Snowpipe, Streams, External Functions etc. where possible, before considering third-party tools.

– Ensure proper access control and credential security when connecting external applications or services to Snowflake. Never hardcode credentials.

– Profile source data thoroughly and implement appropriate data validation, corrections and enrichment logic during loading into Snowflake.

– Implement orderly schema evolution and compatibility checking when changing staged data structures.

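– Tune loading to your data characteristics. Snowflake parallelizes a load across files, so split large datasets into multiple compressed files (Snowflake’s general guidance is roughly 100 to 250 MB compressed per file), choose appropriate file formats, and size warehouses for the load volume and concurrency you need.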

– Containerize ETL workflows using Docker and orchestrate using workflow automation tools. Helps with portability, reusability and manageability.

– Build monitoring dashboards and alerts that track key integration metrics such as data volumes, pipeline delays, and failures, and fix issues promptly.

– Follow change management and CI/CD disciplines for integration code/scripts. Maintain proper version control and dev/test/prod environments.
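One way to make schema evolution orderly is to compare the new structure of a staged dataset with the current one before loading it. This sketch applies a hypothetical policy (added columns are allowed; dropped columns and type changes are flagged) to simple column-to-type mappings; a real check would read these from source metadata or from Snowflake’s `INFORMATION_SCHEMA.COLUMNS`.

```python
from typing import Dict, List


def breaking_changes(old: Dict[str, str], new: Dict[str, str]) -> List[str]:
    """List breaking differences between two column->type mappings.

    Hypothetical policy: new columns are compatible; a dropped column or a
    changed type (compared case-insensitively) is breaking.
    """
    problems = []
    for column, old_type in old.items():
        if column not in new:
            problems.append(f"column dropped: {column}")
        elif new[column].upper() != old_type.upper():
            problems.append(f"type changed: {column} {old_type} -> {new[column]}")
    return problems


current = {"product_id": "NUMBER", "name": "STRING", "price": "NUMBER(10,2)"}
print(breaking_changes(current, {**current, "category": "STRING"}))
```

A CI job could run this check on every change to an extraction job and block deployment when the returned list is not empty.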

By following these best practices, you can build scalable, reliable and efficient data integration pipelines with Snowflake.

Deep Dive into Snowflake API Integration

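In addition to loading and transforming data, Snowflake supports integration with external applications and services through programmatic interfaces. Alongside its drivers, the Snowflake SQL API is a REST API that lets applications submit SQL statements over HTTPS and retrieve the results. Since most account operations in Snowflake can be expressed in SQL, this covers data access as well as much of the platform’s administration.

Through these interfaces, applications can use: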

– Authentication and access control for securely connecting applications to Snowflake

– APIs for administrative operations like managing users, warehouses, databases etc.

– Core data operations such as running queries, loading data etc.

– Metadata management capabilities for programmatic schema access and modifications

– Support for running ETL, orchestration, and data processing jobs

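– Asynchronous execution of long-running statements, such as cloning large databases, with status polling

– Monitoring of usage and query history, for example through ACCOUNT_USAGE views and INFORMATION_SCHEMA table functions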
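The SQL API accepts statements as JSON posted to `/api/v2/statements`. The sketch below only prepares the HTTP request with Python’s standard library; the account identifier and token are placeholders, and the token is assumed to be either a key-pair JWT or an OAuth access token, matching the `X-Snowflake-Authorization-Token-Type` header.

```python
import json
import urllib.request
from typing import Optional


def build_statement_request(account: str, token: str, statement: str, *,
                            token_type: str = "KEYPAIR_JWT",
                            warehouse: Optional[str] = None,
                            role: Optional[str] = None,
                            timeout_s: int = 60,
                            run_async: bool = False) -> urllib.request.Request:
    """Prepare, but do not send, a POST to the Snowflake SQL API."""
    url = f"https://{account}.snowflakecomputing.com/api/v2/statements"
    if run_async:
        url += "?async=true"  # respond at once with a statement handle
    body = {"statement": statement, "timeout": timeout_s}
    if warehouse:
        body["warehouse"] = warehouse
    if role:
        body["role"] = role
    headers = {
        "Authorization": f"Bearer {token}",
        "X-Snowflake-Authorization-Token-Type": token_type,  # "KEYPAIR_JWT" or "OAUTH"
        "Content-Type": "application/json",
        "Accept": "application/json",
    }
    return urllib.request.Request(url, data=json.dumps(body).encode("utf-8"),
                                  headers=headers, method="POST")
```

Sending the request with `urllib.request.urlopen(req)` returns HTTP 200 with result rows when the statement completes quickly, or HTTP 202 with a statement handle while it is still running (always the case with `async=true`).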

Let us look at some examples of using Snowflake APIs for key integration tasks:

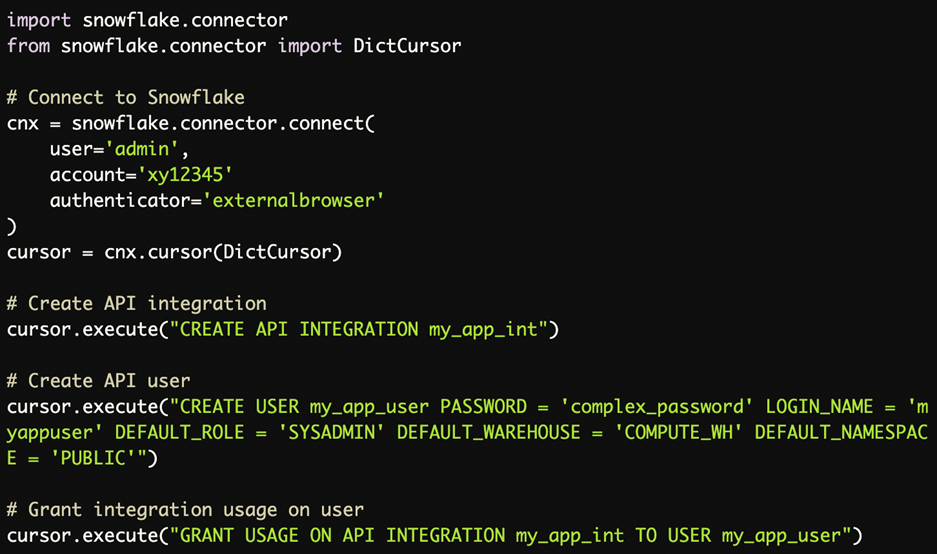

User and Access Management

This sets up a dedicated user for the application, with its own role and grants, and an authentication method suited to services, such as a registered key pair or an OAuth security integration.
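For key-pair authentication, the application signs a short-lived JWT with its private key after the matching public key has been registered on the user (`ALTER USER ... SET RSA_PUBLIC_KEY = '...'`). The claims can be sketched as below, following Snowflake’s documented layout as we understand it: `sub` is `ACCOUNT.USER` in uppercase, `iss` appends the `SHA256:` fingerprint of the DER-encoded public key, and the token is valid for at most one hour. The account identifier format should be checked against Snowflake’s key-pair documentation for your account.

```python
import base64
import hashlib
import time
from typing import Dict, Optional, Union


def public_key_fingerprint(public_key_der: bytes) -> str:
    """'SHA256:' + base64 of the SHA-256 digest of the DER-encoded public key."""
    digest = hashlib.sha256(public_key_der).digest()
    return "SHA256:" + base64.b64encode(digest).decode("ascii")


def keypair_jwt_claims(account: str, user: str, public_key_der: bytes,
                       now: Optional[float] = None,
                       lifetime_s: int = 3600) -> Dict[str, Union[str, int]]:
    """Claims for a key-pair JWT; signing (RS256) is left to a JWT library."""
    if not 0 < lifetime_s <= 3600:
        raise ValueError("key-pair JWTs may be valid for at most one hour")
    issued_at = int(time.time() if now is None else now)
    qualified_user = f"{account.upper()}.{user.upper()}"
    return {
        "iss": f"{qualified_user}.{public_key_fingerprint(public_key_der)}",
        "sub": qualified_user,
        "iat": issued_at,
        "exp": issued_at + lifetime_s,
    }
```

Signing is then one call with a JWT library, for example PyJWT’s `jwt.encode(claims, private_key_pem, algorithm="RS256")`.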

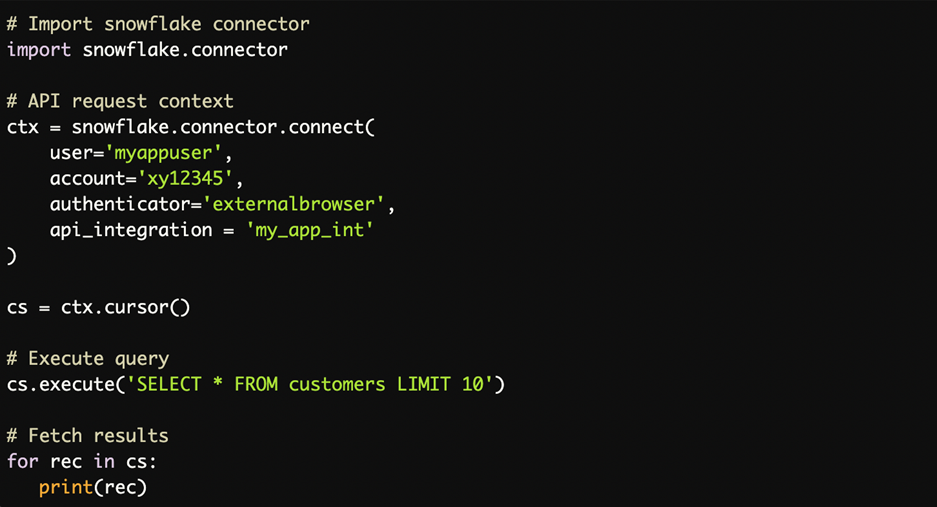

Query Execution

This connects an application to Snowflake as the API user and executes a sample query.
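SQL API responses need a little handling: HTTP 200 means the statement finished, while HTTP 202 means it is still running and the body carries a `statementStatusUrl` to poll. The sketch below keeps the HTTP call abstract through a caller-supplied `fetch` function (hypothetical; for example a wrapper around `urllib.request` that prefixes the account URL and adds the auth headers), so the control flow stays testable.

```python
import time
from typing import Callable, Dict, Tuple

# fetch(path) -> (http_status, parsed_json_body); supplied by the caller.
Fetch = Callable[[str], Tuple[int, Dict]]


def wait_for_statement(status: int, body: Dict, fetch: Fetch,
                       poll_interval_s: float = 1.0, max_polls: int = 60,
                       sleep: Callable[[float], None] = time.sleep) -> Dict:
    """Follow a SQL API response until the statement finishes."""
    polls = 0
    while status == 202:  # still running
        if polls >= max_polls:
            raise TimeoutError("statement still running after polling limit")
        sleep(poll_interval_s)
        status, body = fetch(body["statementStatusUrl"])
        polls += 1
    if status != 200:
        raise RuntimeError(f"statement failed with HTTP {status}: {body.get('message')}")
    return body  # result rows are in body["data"], with values as strings
```

Passing `sleep` as a parameter lets tests run without real delays; production code would keep the default `time.sleep`.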

Loading and Unloading Data

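Through the API, applications can run COPY INTO statements against existing stages to load data into tables and to unload query results to files. Uploading or downloading local files with PUT and GET still requires a Snowflake driver or client, because the SQL API does not support those commands.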
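As a sketch, a load and an unload can be sent in one SQL API request by setting the `MULTI_STATEMENT_COUNT` parameter to the number of statements. The stage and table names are hypothetical, and the body would be posted to `/api/v2/statements` with the usual authentication headers.

```python
import json
from typing import List


def multi_statement_body(statements: List[str], warehouse: str) -> str:
    """JSON body that submits several statements in one SQL API call."""
    return json.dumps({
        "statement": ";\n".join(statements),
        "warehouse": warehouse,
        # Tells Snowflake how many statements to expect in the request.
        "parameters": {"MULTI_STATEMENT_COUNT": str(len(statements))},
    })


load_and_unload = [
    # Load: staged JSON files into a table.
    "COPY INTO raw_products FROM @mysql_export_stage/products/ FILE_FORMAT = (TYPE = 'JSON')",
    # Unload: table contents to Parquet files in another stage.
    "COPY INTO @export_stage/daily_metrics/ FROM daily_product_metrics FILE_FORMAT = (TYPE = 'PARQUET')",
]
print(multi_statement_body(load_and_unload, "LOAD_WH"))
```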

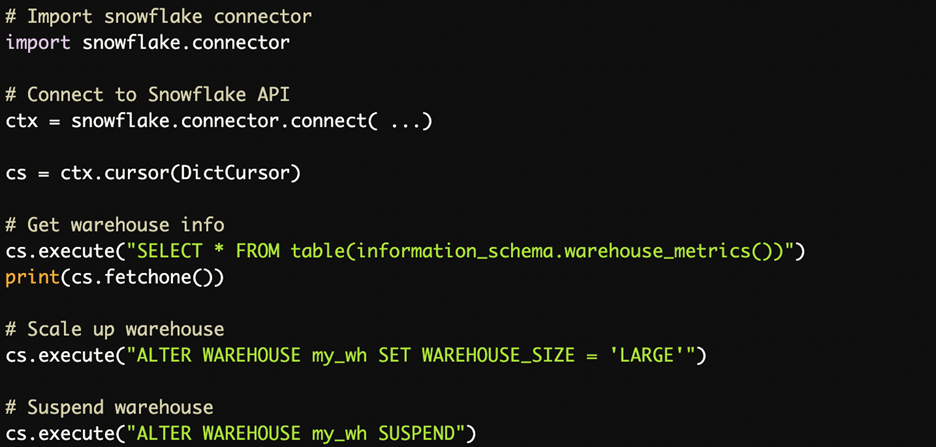

Monitoring and Administration

This illustrates how Snowflake APIs can be used for monitoring and administrative operations like scaling and suspending warehouses.
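The statements behind such operations are plain SQL, so an application can send them through the SQL API or a driver. A sketch with a hypothetical warehouse name: the query-history statement uses the `INFORMATION_SCHEMA.QUERY_HISTORY_BY_WAREHOUSE` table function, which needs a current database in the session, and treats any row with an error code as a failure.

```python
from typing import Dict

# Subset of Snowflake warehouse sizes, enough for this sketch.
SIZES = {"XSMALL", "SMALL", "MEDIUM", "LARGE", "XLARGE"}


def warehouse_admin_sql(warehouse: str, size: str = "MEDIUM") -> Dict[str, str]:
    """Statements an admin service might send through the SQL API or a driver."""
    size = size.upper()
    if size not in SIZES:
        raise ValueError(f"unsupported warehouse size: {size}")
    return {
        "resize": f"ALTER WAREHOUSE {warehouse} SET WAREHOUSE_SIZE = '{size}'",
        "suspend": f"ALTER WAREHOUSE {warehouse} SUSPEND",
        "resume": f"ALTER WAREHOUSE {warehouse} RESUME IF SUSPENDED",
        # Recent failed queries on this warehouse.
        "recent_failures": (
            "SELECT query_id, error_code, error_message, start_time\n"
            "FROM TABLE(INFORMATION_SCHEMA.QUERY_HISTORY_BY_WAREHOUSE(\n"
            f"  WAREHOUSE_NAME => '{warehouse.upper()}', RESULT_LIMIT => 100))\n"
            "WHERE error_code IS NOT NULL\n"
            "ORDER BY start_time DESC"
        ),
    }
```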

There are endless possibilities once you start harnessing the power of Snowflake APIs for programmatic automation and integration!

Strategies for Effective Snowflake API Integration

While Snowflake APIs open up many integration possibilities, here are some key strategies for maximizing effectiveness:

– Authenticate with OAuth (optionally through your identity provider for single sign-on) or with key-pair authentication. Never hardcode Snowflake credentials.

– Follow security best practices around key rotation, IP allowlisting, and access control. Protect API keys and tokens.

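– Implement request validation, input sanitization and rate limiting on API requests to prevent abuse, and pass user input as bind variables rather than concatenating it into SQL.

– Handle transient errors and throttling with retries and exponential backoff for resilience against failures.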

– Set up API monitoring, logging, and alerts to track usage patterns and promptly detect issues.

– Provide SDKs and well-documented APIs for easy integration by developers.

– Support versioning to maintain backwards compatibility as APIs evolve.

– Containerize API workloads for portability across environments.

– Follow API-first design principles for consistent interfaces.
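Retry logic for API calls can be as simple as exponential backoff with jitter around each call. This sketch assumes HTTP 429 (rate limited) and 503 (service unavailable) are the retryable cases and uses a hypothetical `ApiError` exception that the HTTP layer would raise; other errors, such as SQL compilation failures, surface immediately.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")

# Assumed retryable status codes for this sketch.
RETRYABLE = {429, 503}


class ApiError(Exception):
    """Hypothetical error raised by the HTTP layer for non-success responses."""

    def __init__(self, status: int, message: str = ""):
        super().__init__(f"HTTP {status}: {message}")
        self.status = status


def call_with_retries(call: Callable[[], T], max_attempts: int = 5,
                      base_delay_s: float = 0.5, max_delay_s: float = 30.0,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return call()
        except ApiError as err:
            if err.status not in RETRYABLE or attempt == max_attempts:
                raise
            # Full jitter: wait a random time up to the exponential cap.
            cap = min(max_delay_s, base_delay_s * 2 ** (attempt - 1))
            sleep(random.uniform(0, cap))
    raise AssertionError("unreachable")
```

Randomizing the wait spreads out retries from many clients, so a burst of throttled requests does not return in lockstep.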

By following these strategies, you can build scalable and robust API-based integration with Snowflake’s data cloud.

Conclusion

Snowflake makes it easy to integrate diverse data sources, locations and application environments onto a single, scalable platform. By following the patterns, technologies and best practices covered in this guide, you can develop robust data integration flows to maximize the value from your Snowflake investment.

The key is approaching integrations holistically, combining batch, real-time, and service-oriented strategies based on your workload needs. With Snowflake’s versatility, performance and ecosystem of partners, the possibilities are endless. So get started today on building your modern integrated data architecture!

To learn more and get expert guidance tailored to your use case, check out Beyond Key’s Snowflake consulting services. Our team brings extensive real-world expertise to help you through any stage of your Snowflake journey – from planning and architecture to seamless deployment and ongoing enhancements. Get in touch for a free consultation.